Background – The uncontrollable surge of data

The web is getting inundated with gigabytes of data every second and this data flood is continuously rising as if Moor’s law also applies to data as well as to the microprocessor’s performance. By 2025 the total annual consumption of data is estimated to cross 181 zettabytes compared to 97 zettabytes in 2022.

Almost every single digital device captures some kind of data and transmits it to a corresponding data collection center for processing, analyzing, synthesizing, and getting integrated with other relevant types of data to produce new information. The decision-making process in every organization now heavily relies upon data-derived knowledge as data patterns in various context reveals prevalent world trends. This helps the decision-makers spot opportunities for their next actions or even leads to innovation.

This has created an oversize cloud of data in the web space – structured, or unstructured in every format conceivable. From the outset, it looks like anarchical chaos because the locations of these data formats keep changing, and one source point can collect multiple types of data. Such data alone as it is collected can not make any meaning. It has to be seen in its individual context to make sense.

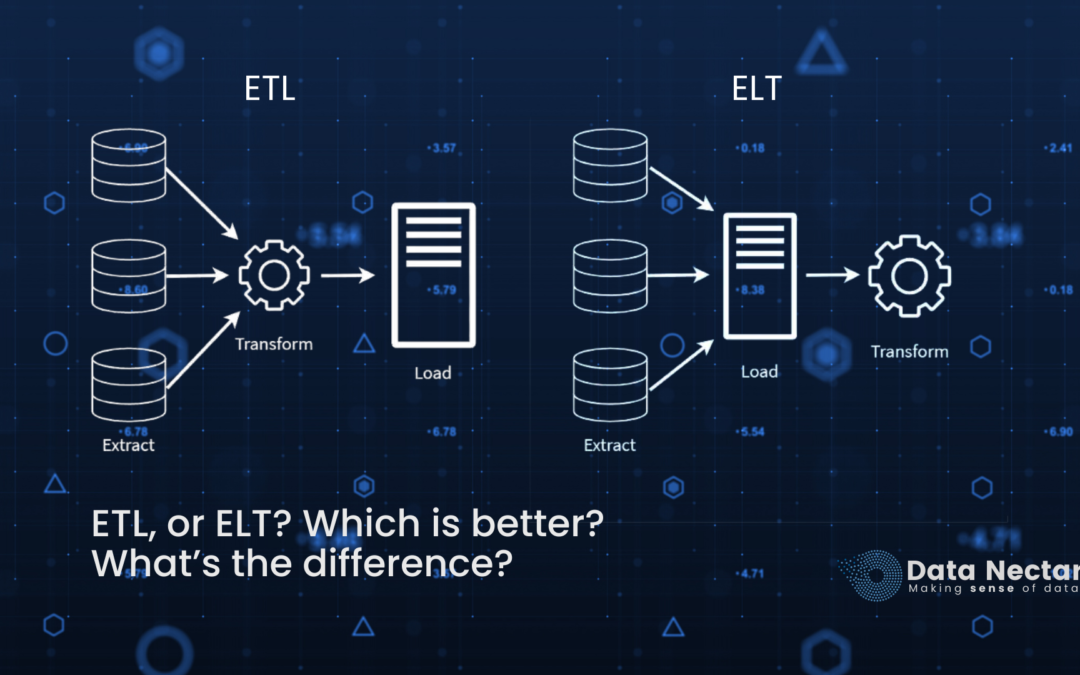

For that, it requires the data to be integrated in specific manners. And special methodologies are employed to integrate such a large amount of data. At present, the industry has adopted two types of data integration strategies to streamline multiple disparate information into one stable source of knowledge. They are called ETL and ELT. Though the end result is expected to be the same through both of them, applied methods are the distinguishing factor.

First, let us understand what data integration is.

Data Integration

As it implies, it means ‘the process of integrating various types of data from various sources in a ‘unified way’ so that it can be used for the subsequent systems or for advanced analytics. Integrated data can be located at a central source for multiple users and stakeholders to derive optimum performance from their data operations such as queries, and minimize the load on the data source.

It’s used for analytical purposes, sending the results to databases, data warehouses, or data marts, and organizing the data in specific analytic formats like star schemas, multi-dimensional, etc. Data integration also includes enhancing the data inside an application such as CRM and bringing together all the data need for a new downstream application.

Data integration processes

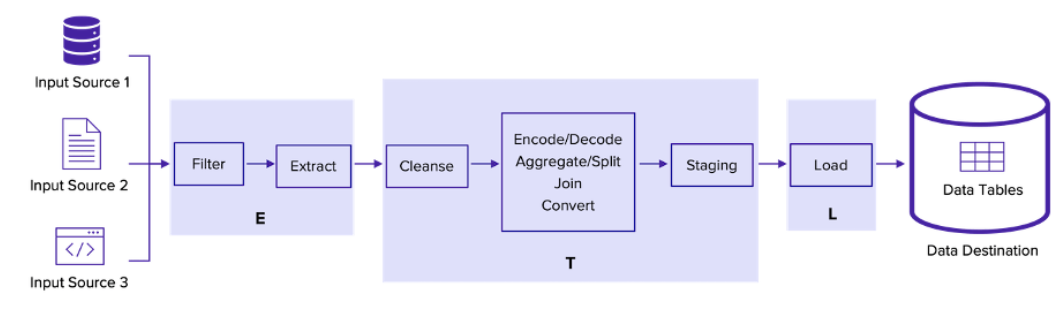

Data integration involves three key processes

- Extracting the data,

- Transforming it into a well-defined unified view, and

- Loading the unified data into the destination.

Very rarely all three operations consist of one single process. Most of the time all three steps run separately. The extraction is always the first process. But Loading, and transforming steps can be executed in two different orders. Therefore sometimes it is ETL, and sometimes ELT.

Both processes have their distinctive advantages. We’ll understand the difference between ELT and ETL later in this article. But first let’s define each method the E, the T, and the L.

Extraction

Raw data is pulled from a source or multiple sources such as transactional systems, CRM data, or ERP data, or IoT sensors that collect readings from various source points. The extracted data can be in multiple formats, such as CSV, relational databases, MS Access files, XML, JSON, etc. Typically it involves using SQL for extraction. Generally, the extracted data is in an unstructured format.

It’s an accepted practice in the ETL processes that the data is temporarily placed into a staging repository.

Transformation

In this process the schema is employed and the data is reorganized, reframed, reshaped, reordered, reconfigured, and updated to match the business requirements and data storage solution needs.

It can include filtering, pulling in data from other sources, and other processes, converting various data types into one single format, cleansing the data by removing inconsistencies and duplicate entries, validating, and authenticating.

The calculation, conversions of the raw data headers as required by the destination’s needs, converting of measuring units and currencies, as the BI process demands, analysis or synthesis, making it compliant with government regulations, etc also take place at this stage and also deleting inaccurate data raws (this can also take place during the extraction process,) blending, enrichment, aggregating or combining data elements from multiple data models.

During the process, the data is also run through a cleansing process to prevent the inclusion of bad or non-matching data in the destination repository. A set of predefined rules and functions are also applied.

Additional transformation also must be done to clean and model data before it can be practically useful for other analytics applications.

Loading

Loading is a process that delivers the transformed data into the destination data store such as a data warehouse, data mart, analytical database, or OLAP engine, and secures it for sharing (It is also a possibility that some of the final reorganizations of the data can be defined and coded during this step.)

This step makes the data business-ready and makes it available to internal and external users. The destination’s existing data can also get overwritten as a result of this process. This possibility can be handled in advance prior to executing the loading process.

With these three processes, the data integration can be conducted in two ways ELT, and ETL. In the ELT methodology, data is copied from the source directly into the data warehouse without much alteration. In the ETL methodology data is transformed into a suitable format before it’s loaded into the warehouse.

We will look into these processes in detail later in this article.

Similarities in both processes

- Both ETL and ELT allow the consolidation of data from multiple sources into a single repository with data that is formatted in a predefined way.

- This single unified data repository makes simplified access for analysis and additional processing possible.

- It also provides a single source of up-to-date knowledge ensuring the consistency of all enterprise data.

What is ETL?

ETL is a data integration methodology in the order of Extracting, Transforming, and then Loading.

The raw data is first copied from the origin, then cleansed and restructured/reformatted in a ‘staging area’ during the transform phase, and then moved into the data warehouse or pushed forward.

The ETL workflow is linear in nature and it pairs well with relational data warehouses. It requires data transformations to enforce strict schema and data quality before loading to the destination.

At the same time, ELT is best compatible with data lakes that accept structured or unstructured data.

ETL Use cases

The ETL data integration is useful in these kinds of scenarios…

-

- Where the data is being real-time extracted from the AI-enabled devices for machine learning.

- To integrate and analyze the real-time influx of marketing data.

- To integrate and process the IoT data collection.

- Replication of multiple databases in real-time for more than one destination.

- Real-time migration of the databases to the cloud.

When to use ETL:

The most common use cases of ETL software in the enterprise sector are Data Warehousing and Data Migration.

Data Warehousing.

A data warehouse is a database with data from multiple sources combined in a fashion that can be collectively analyzed as per the needs.

Data Warehouse is a well-organized environment where a large amount of database is housed. Prior to the loading of the data onto that, it is taken through many of the operations we talked about in one of the above sections, and transformed. Once it’s loaded, it becomes a ‘single point of reference for the business.

Quality, accuracy, and consistency are vital factors to build a data warehouse. The ETL tool does that whether it is employed on-premise, or in a cloud data warehouse so that the subsequent processes can be executed effortlessly.

Data Migration

ETL is also used to upgrade databases or transport data from a legacy system to a modern one.

The main challenge against data migration is a mismatching data structure and table format. The ETL tool can iron out these disparities with its modern enhanced abilities and ensures compatibility of the source data (the format, structure, and scheme) with the target system.

What is ELT?

This process is first to extract, then load the data, and transform. It is relatively a newer method for achieving the integration of data from across an organization and preventing uncontrolled data duplication.

Data is extracted from its origin, loaded into the datastore, and then taken through the pre-defined business logic to get transformed “as and when required.” The transformation will typically happen on an as-needed basis, while in the ETL methodology, all data is transformed first before it is stored.

ELT Use Cases

ELT is generally used in real-time high-volume data environments such as stock exchanges, large-scale distribution networks, industrial processes, and anybody who need real-time access to updated data and business intelligence for instant decision-making.

The most common use case of ELT software in the enterprise sector is…

When to use ELT?

Optimization of the pushdown process

In an ELT process, data is fetched, loaded onto the database, and then taken through the transformation processes. For high-volume data, this process is preferred. It prevents the server from getting overburdened because the processing is performed in the database.

ELT’s greatest strength is its speed and support for diverse data types.

Other Applications when ELT is a better choice

-

-

-

- When data needs to be stored at high speed.

- When the data integration process requires to be flexible and data needs frequent formatting.

- When the amount of data to be processed is large.

- When the raw historical data has to be retained for future analysis. If the business needs to analyze data trends, old data is required. ELT keeps old raw data and doesn’t require reloading.

-

-

Main differences between ETL vs ELT

The major difference between ETL and ELT is the order of the events through which data integration is accomplished.

ETL

- Using ETL is a preferred option when on-premise data needs to be structured before being uploaded onto a data warehouse.

- This method works efficiently when datasets are not large because large datasets will consume more time and computing resources.

- Also, the process and parameters are predefined for the transformation phase.

ELT

- At the same time ELT is preferred when the data volume is large.

- Also, it’s implemented in an environment where the storage is large and adequate computing power available.

- This allows the data lake to efficiently store and transform data as required.

- The format of data can also be modified at short notice in ELT however running of such queries can certainly add up that much time to the process.

- In ETL queries can be applied right away on the data after loading.

Comparison of the Benefits of ELT over ETL

| Comparison parameter | ETL | ELT |

| Flexibility | Intended for structured relational data | Structured as well unstructured data allowed on cloud-based data warehouses |

| Accessibility | Supported, maintained, and governed by the IT teams. | Easy for the users to access and use. |

| Scalability | Resource intensive, local, and limited availability. | Cloud based SaaS, is available to a wide range of users. |

| Loading time | Takes longer to load as it comes through the staging area for the transformation. | As it copies raw data as it is onto the destination, it loads it quickly. |

| Transformation time | is generally slower. Dependent on the size of the datasets. | Not dependent on the data size. |

| Maintenance | Less time required. If the transformation doesn’t meet the business needs, the data has to be re-sourced and re-loaded. | Additional transformation can be performed as the original data is intact and already loaded. |

ETL vs. ELT: Pros and Cons

It’s clearly context-dependent so there isn’t a verdict for which one is better than the other. Both data management methods have their distinctive advantages and shortcomings. Let us review them one by one.

Advantages of ELT

-

- More effective in a real-time data scenario.

- Preferred choice to handle large data volume or big data.

- Preferred when the transformation process is complex.

- Can work with schema-less data such as No-SQL, Mongo DB, and Cosmos DB.

- In terms of flexibility of data formats, ELT can ingest data in any format, even when paired with a data lake. The data lake even accepts unstructured data.

- The transformation can take place as and when needed. This can keep the source compute system free from large storage needs and heavy processing leaving the storage space available for other functions and doesn’t much consume the processing power.

- ELT loads all the data to the data lake making it available anytime. That allows the tools that don’t immediately require structured data to interact with the loaded data instead of waiting until it’s transformed.

- Loading speed is better because the process of transformation happens separately. In addition, the raw data is loaded into the data lake in real time which provides immediate access to information.

- Implementation speed is better because the transformation is performed as and when required. ELT can be quickly applied to new sources of data and new information can be uploaded onto the data lake while the queries are being written and the data is analyzed.

Limitations / Shortcomings of ELT

-

- Compliance issue: The legal authorities may not allow the companies to store certain kinds of data. This can be a deterrent as ELT requires accessing and extracting from the raw data. Also, as ELT is integrated with the cloud, some regulations can prohibit their information outside the designated premises or network.

- It’s relatively a newer approach and therefore the number of professionals who can provide the service is however on the rise, it’s still inadequate than the market demands.

- ELT is efficient only if paired with the storage and processing power of the cloud. That makes it cloud-dependent.

- The analysis can take a long time as data is taken through a pre-defined business logic for transformation. This doesn’t allow large volumes of unstructured data to be inspected at a moment’s notice.

Advantages of ETL

-

- ETL’s greatest strength is its structuring of data for more in-depth analysis and examination.

- ETL can be a better choice if the business needs quick analysis of data as it is a two-step process. Once the data structure is defined and transformation executed with ETL, the queries are rather efficient. That can produce faster analysis.

- It’s easy to comply with legal regulations as any piece of data doesn’t need to leave the source data point.

- Compared to ELT ETL is more secure because security checks can be performed or data can be encrypted at the source itself, and only the pieces of information which are necessary can be brought out.

- ETL is adaptable to either an on-premise environment or a cloud-based. Data can be taken from on-premise systems and uploaded onto a cloud-based datastore.

- ETL can address the data location-related compliance issue that ELT can not. When companies are abiding by data confidentiality and privacy regulations, ETL removes, masks, or encrypts sensitive data before it’s loaded into the data warehouse.

- ETL is quite more mature than ELT. It has been used for over two decades. That means the availability of experienced exponents for ETL implementation operations. That can also mean more ETL tools in the marketplace to build data pipelines.

Limitations / Shortcomings of ETL

-

- The loading speed can be slow as it spends time in the staging area to perform transformations on the data so it may not be available quickly enough in the datastore while in ELT data is available instantaneously as it’s extracted.

- ETL can be quite a resource-consuming process at the source in terms of the space it occupies on the local hard drives and also the local computing power.

- The ELT workflow lacks agility. If the data structure of the new queries or analysis does not match that in the warehouse, the entire transformation process and schema of the data warehouse will need to be modified.

- Data volume limitations. ETL is not quite efficient to handle large volumes of data given the time it takes in transformation. It’s best suited for smaller data sets.

The future prospects of ELT

It seems that ELT is becoming the new standard process and there seem quite a few reasons to believe that.

Source system reasons

-

- The source data is getting larger from all big data sources and multiple other sources.

- Unstructured or semi-structured data need complex transformations to make sense.

Target system reasons

-

- The target systems are getting more mature in the new era. They are getting more and more robust and scalable for their storage space expansions and computation power.

Design and application choice reason

-

- Data model design changes can be kept open to modifications.

- ETL is the best choice for data science and machine learning.

- It’s also the best choice for the data from IoT devices, all kinds of sensors, and server logs.

The future prospects of ETL

There is a prevailing skepticism that ETL may soon become obsolete. However, it’s far from the truth. This belief is held by the relatively new data professionals because of their subjective ‘recency bias’ and their deep desire to be considered as the adapters of the new technologies.

Not denying that there do exist some alternative systems such as Hadoop or Spark that can serve the specific data management & integration needs. They can store large amounts of data on quite conventional hardware and are easy to scale up so they can be ideal for large data repositories. They can also quite efficiently store structured and unstructured data parallelly.

However, there are reasons to believe that the ETL approach will still remain relevant in the time to come. Some of them are as follows. Some of the new technology features bring a great advantage to the process. They are…..

-

- More Efficient Storage Usage

- Fast and Accurate Analytics

- Next-Gen Transformations

- Privacy, Security, and Compliance

- In addition to that, the AI-powered interfaces and extensive integration libraries make modern data platforms such as integrate.io rather powerful.

Modern ETL is cloud-based, automated, and can be customized in synch with your business. There are also some trends like…

-

- real-time streaming

- ETLT (an innovative sequence of extract, transformation, load, and then detailed further transformation,) and also a new concept of

- Data Lakehouse which combines a data lake that supports virtual warehouse structures to hold integrated data supported by AI and other modern ETL processes.

Therefore we see no reason for the ETL approach to get obsolete in the near future and believe that it will continue to thrive as it has been for over four decades.

How can Data Nectar support you to transform your data into a profiting asset?

This is an era when a large amount of data from various sources has become an inevitable part of the business. Integrating it, managing, organizing, and interpreting it in numerous ways is although an arduous task, and a daunting challenge, it’s vital and inevitable.

Because the quality of such analysis has a direct impact on the quality of your data-driven business decisions.

Therefore it is more than important that all the data operations are conducted by those who thoroughly understand every business process, and have the prowess to create multi-dimensional visualization of any data.

Data Nectar is the answer to your search for such a partner. We are here to support you in making maximum sense of your data asset through…

-

- Data strategy,

- Business Intelligence,

- Data science and analytics, and

- Application engineering

And keep your data safe, and steer your data-driven decisions.

So feel free to call on +91-79-46034308 or drop a line at info@data-nectar.com to discuss your data management and integration needs.